Pensieve

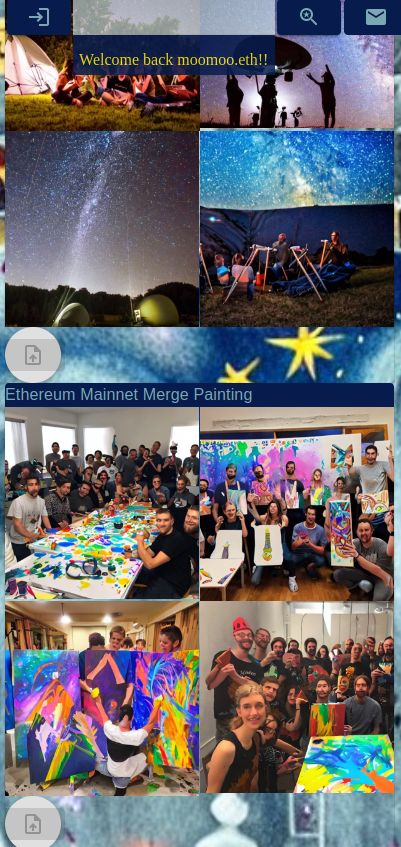

Pensieve is a decentralized file storage dApp for recreating the moments in your memories and sharing them with others. As you upload these memories, you can also choose to take part in the documentation of the HISTORY OF APES (HOMO SAPIENS).

Winner of

🥇 Beryx — Best use of Beryx API

🥇 Bacalhau — Best Use

🥇 DataverseOS — Best Use

Project Description

Some of us take so many photos and videos of our lives that our phones are constantly out of storage. Some of these are great photos, and some are blurry duplicates of an exciting moment that look like junk to human eyes now. However, they are potentially useful data for future machine learning and computer vision algorithms that reconstruct immersive moments from your memories. How about a dApp to save them ALL: save all those memories like Dumbledore's pensieve...

Pensieve is a decentralized file storage dApp for recreating the moments in your memories and sharing them with others. As you upload these memories, you can also choose to take part in the documentation of the HISTORY OF APES (HOMO SAPIENS).

Memory Token Actions on FVM

- Anyone can add an Event by registering a POAP.

- The POAP NFT grants participants access to read and add data (CID) to that Event.

- Non-participants, who want to read data about an Event, can propose deals where POAP holders of that Event can vote to decide whether to accept them.

- The deal reward is split among the POAP holders who have contributed (e.g. uploaded photos) to reconstruct that shared memory.

- (NOT DONE YET) People can vote on significance of the event and the events with significance score above a threshold will form our decentralised "HISTORY OF APES".

- (NOT DONE YET) Anyone can challenge to say a piece of data is fake. If they are wrong, they lose their stake. If they are right, they claim their stake and the data uploader's penalty.
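
The reward split described above can be sketched in a few lines. This is only an illustration of the arithmetic, not the project's contract code: the function name, the equal-share rule, and the attoFIL integer units are all assumptions, since the description does not say whether shares are equal or weighted by contribution.

```python
from typing import Dict, List


def split_deal_reward(reward_atto: int, contributors: List[str]) -> Dict[str, int]:
    """Split a deal reward equally among contributing POAP holders.

    Hypothetical helper: amounts are integers (assumed attoFIL) so no value is
    lost to rounding. Any remainder from the integer division is handed out one
    unit at a time to the first addresses in sorted order, so the shares always
    sum to the full reward.
    """
    unique = sorted(set(contributors))  # each holder is paid once
    if not unique:
        raise ValueError("at least one contributor is required")
    if reward_atto < 0:
        raise ValueError("reward must be non-negative")
    base, remainder = divmod(reward_atto, len(unique))
    return {
        addr: base + (1 if i < remainder else 0)
        for i, addr in enumerate(unique)
    }
```

Deduplicating the contributor list first means that someone who uploaded several photos still receives a single share under this assumed rule; a contribution-weighted split would need per-holder counts instead.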

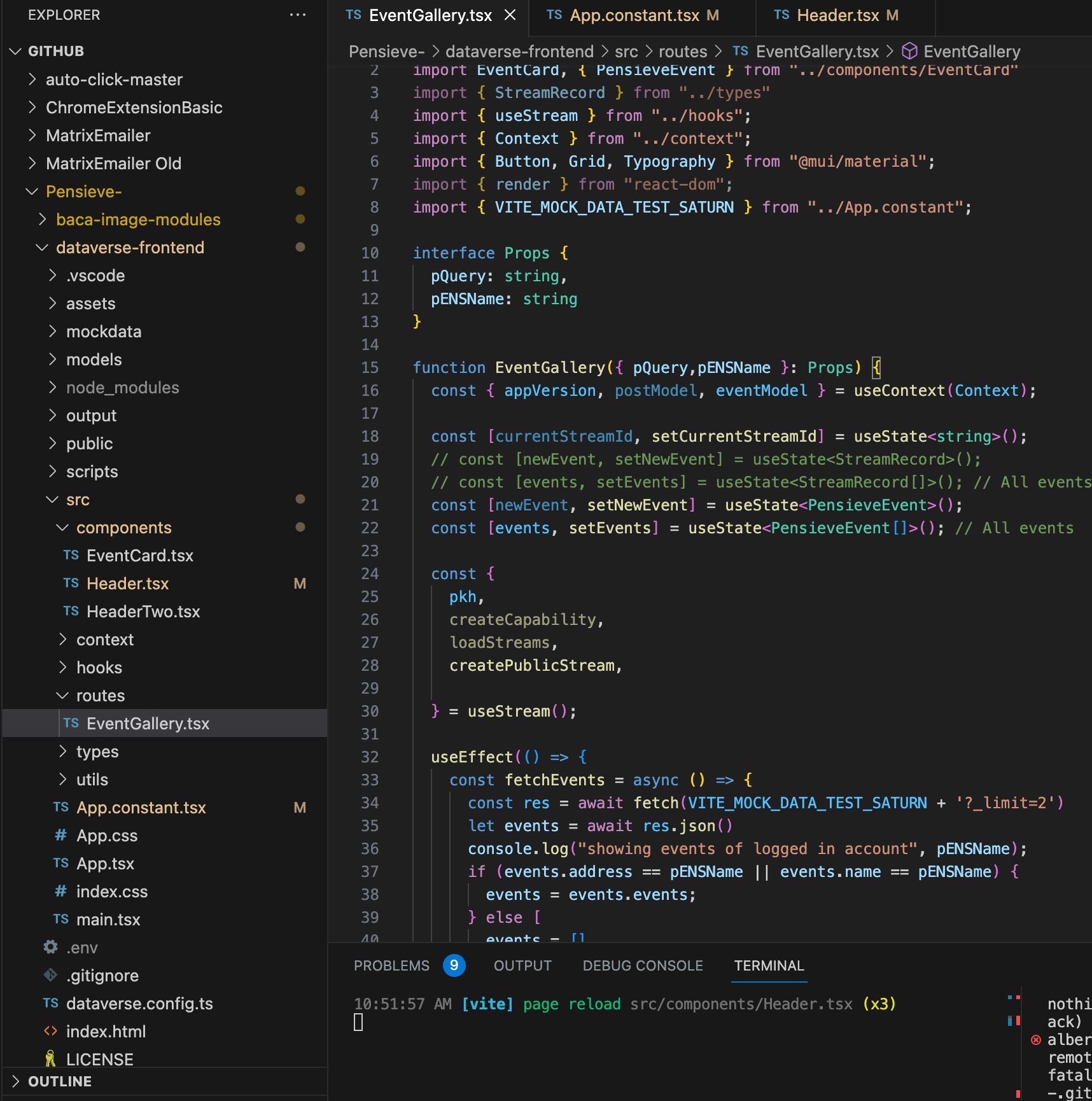

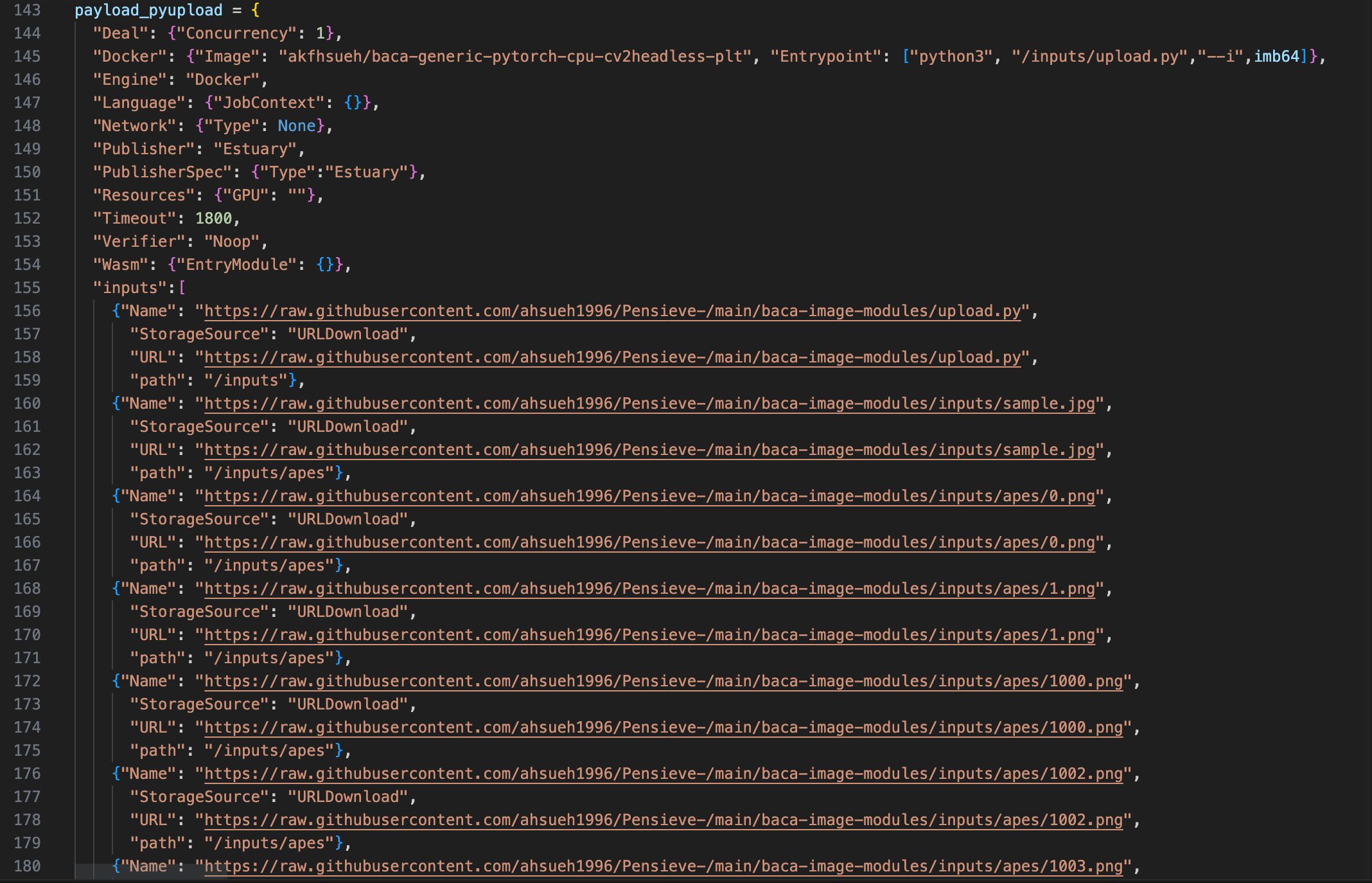

Data Flow and Technologies

- The user connects to the dApp on the Filecoin network (Calibration testnet) through the Dataverse Wallet. Their address is resolved to their ENS name on the Ethereum Goerli testnet.

- This grants the dApp access to that user’s encrypted storage (via Lit Protocol’s Lit Action) on Ceramic ComposeDB, with bulky files like images stored on IPFS.

- Once connected, the user can interact with Pensieve DAO’s smart contracts on Filecoin’s FVM!

- The user can register a new event, and thereby get read and write access to it, by proving ownership of that event's POAP NFT (the algo groups photos based on POAP). The POAP NFTs for the demo are created on FVM with their metadata stored on NFT.Storage.

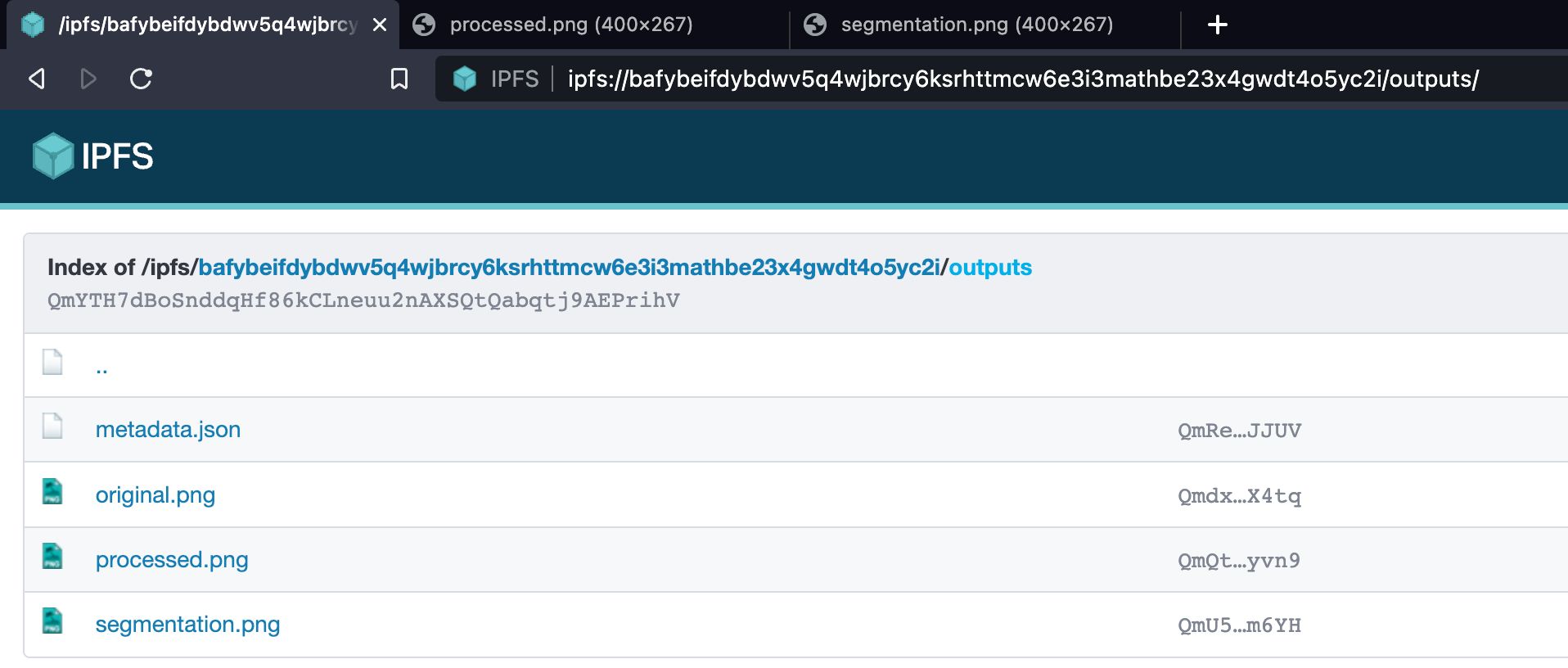

- When users upload photos, the photos are encoded and then passed to Bacalhau’s containerized machine learning image processing flow, which runs segmentation and timestamps the photos based on Filecoin’s tipset height (queried from the Beryx API). Bacalhau stores the processed output on IPFS.

- The client frontend queries the processed images from IPFS, optimized via the Filecoin Saturn CDN. However, this CDN integration currently seems broken :(
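
A tipset height works as a timestamp because Filecoin produces one epoch every 30 seconds, so a height maps to wall-clock time as genesis time plus height times 30 seconds. The sketch below shows that conversion. The function and constant names are illustrative, and the default genesis is the mainnet one; the Calibration testnet used in this project has its own genesis time, which would need to be passed in.

```python
from datetime import datetime, timedelta, timezone

EPOCH_SECONDS = 30  # Filecoin block time: one epoch (tipset height) every 30 s

# Mainnet genesis (Unix time 1598306400). Assumption: shown as a default only;
# the Calibration testnet has a different genesis and must be supplied explicitly.
MAINNET_GENESIS = datetime(2020, 8, 24, 22, 0, tzinfo=timezone.utc)


def tipset_height_to_time(height: int, genesis: datetime = MAINNET_GENESIS) -> datetime:
    """Convert a tipset height (epoch number) to its UTC wall-clock time."""
    if height < 0:
        raise ValueError("tipset height must be non-negative")
    return genesis + timedelta(seconds=height * EPOCH_SECONDS)
```

Storing the height rather than a client-supplied time means the timestamp comes from the chain, not from the uploader's device clock.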

Some Future Directions

- Fix integration bugs and optimise the integration flow (communication with Bacalhau is very slow right now!)

- Allow people to vote on significance of the event and the events with significance score above a threshold will form our decentralised "HISTORY OF APES".

- Allow people to challenge the data’s “trueness”, so that people cannot upload AI-generated fake pictures of things that never happened.

See our project Trello board for a list of ideas that we never got to finish implementing!

How it's Made

Image Processing and Upload (with Bacalhau)

When you upload a picture to Pensieve, it is automatically processed with the following procedure:

- Deep Learning Inference using fcn-resnet image segmentation to locate and detect scene objects (example)

- Haar cascade face detection

- All detected faces are blurred

- The GrabCut algorithm removes the backgrounds from randomly selected Bored Apes

- The resulting Apes get placed on the blurred faces for aesthetics

- The image is timestamped with the tipset height of Filecoin (using Beryx API)

- Metadata and images are saved to IPFS
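
The step where a background-free Ape is placed over a blurred face comes down to a masked paste: only the pixels marked as foreground are copied onto the photo. Here is a toy sketch of that idea using plain nested lists in place of real image arrays. It is not the project's code; the function name and data layout are assumptions made for illustration.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def paste_with_mask(photo: Image, overlay: Image, mask: List[List[bool]],
                    top: int, left: int) -> Image:
    """Return a copy of `photo` with the overlay's foreground pixels pasted in.

    `mask[r][c]` is True where the overlay pixel is foreground (the kind of
    mask a background-removal step produces). `top` and `left` give the
    position of the overlay's upper-left corner, e.g. a detected face box.
    Pixels that would land outside the photo are skipped.
    """
    out = [row[:] for row in photo]  # copy so the input photo is untouched
    for r, mask_row in enumerate(mask):
        for c, is_foreground in enumerate(mask_row):
            y, x = top + r, left + c
            if is_foreground and 0 <= y < len(out) and 0 <= x < len(out[0]):
                out[y][x] = overlay[r][c]
    return out
```

With an image library the same operation is usually a single masked array assignment; the loop form is used here only to make each step visible.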

The coolest part is that all of the above was done in Python (a language not normally associated with Web3 development) by a data science guy, all thanks to Bacalhau. We discovered that the best way to develop iteratively on Bacalhau is to pass a specific Python script and its inputs to a Bacalhau node running a generic Docker image. Most data science people will find it familiar to think of Bacalhau as the virtual environment you set up first, before running scripts or projects in it. The following "nota benes" should be considered to make this design pattern work:

- Write a script that lists the specific dependencies you will use. This helps you test the Bacalhau node to make sure it works for all future scripts that require the same dependencies (example)

- Optimize Dockerfile to reduce layers, image size & thus the Docker build and push times (example)

- Run the Docker build with Linux as the target platform, because Bacalhau currently only has Linux nodes (cite)

- Build and push your custom environment (hopefully once!). Do this early and be prepared for long build and push times.

- Avoid calling external APIs in scripts where possible, because Bacalhau has a whitelist of which networks can be reached from within the Bacalhau node. Use --network=full, or --network=http together with --domain=example.com, to get connected.

- Bacalhau is used through the CLI by default, but we worked out how to use a generic API endpoint!

- The entry point can carry data in string format. We used this to avoid uploading the unprocessed photo to any host during the Pensieve upload process, by passing the base64 string in the POST body. (example python)(example CLI)

- A big catch is how to make the call using JS. This is the key line (code)
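
The base64 trick from the list above can be sketched as a round trip: the client turns the photo bytes into an ASCII string that can travel in the entry point, and the script on the node turns it back into bytes. The helper names and the `process.py` script name are hypothetical, and the exact shape of the Bacalhau request is deliberately left out.

```python
import base64
from typing import List


def encode_photo(photo_bytes: bytes) -> str:
    """Client side: turn raw photo bytes into an ASCII-safe base64 string."""
    return base64.b64encode(photo_bytes).decode("ascii")


def decode_photo(encoded: str) -> bytes:
    """Node side: recover the original bytes; rejects non-base64 input."""
    return base64.b64decode(encoded, validate=True)


def build_entrypoint(encoded: str, script: str = "process.py") -> List[str]:
    """Assemble a command line that carries the photo as a string argument.

    Hypothetical: `process.py` stands in for whatever script the generic
    Docker image runs; it would read the string from sys.argv[1].
    """
    return ["python", script, encoded]
```

Base64 inflates the payload by roughly a third, so this approach suits single photos better than large batches.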

The current limitation is that displaying IPFS pictures in the app with MediaRenderer takes a while to work. We think pinning needs to complete before the HTTP gateway can resolve the content.