Mimiku

Mimiku offers a royalty-based model hub that enables IP providers to monetize their data and AI prompt engineers to generate content, with royalties distributed fairly between the two. By creating a sustainable market for AI-generated content, Mimiku encourages innovation.

Winner of

🏊 Worldcoin — Pool Prize

🥉 Lens — Best Use

🏊♂️ Polygon — Pool Prize

1️⃣ Metamask — Best SDK Usage

Project Description

Mimiku is a blockchain-powered platform that incentivizes fair compensation for original creators and AI prompt engineers in the AI-generated content market.

As AI technology advances, demand for high-quality training data keeps growing. This has led to uncontrolled misuse of celebrities' and creators' content without their permission, while the IP providers receive no compensation for it. This is a serious problem, made worse by the lack of laws protecting creators.

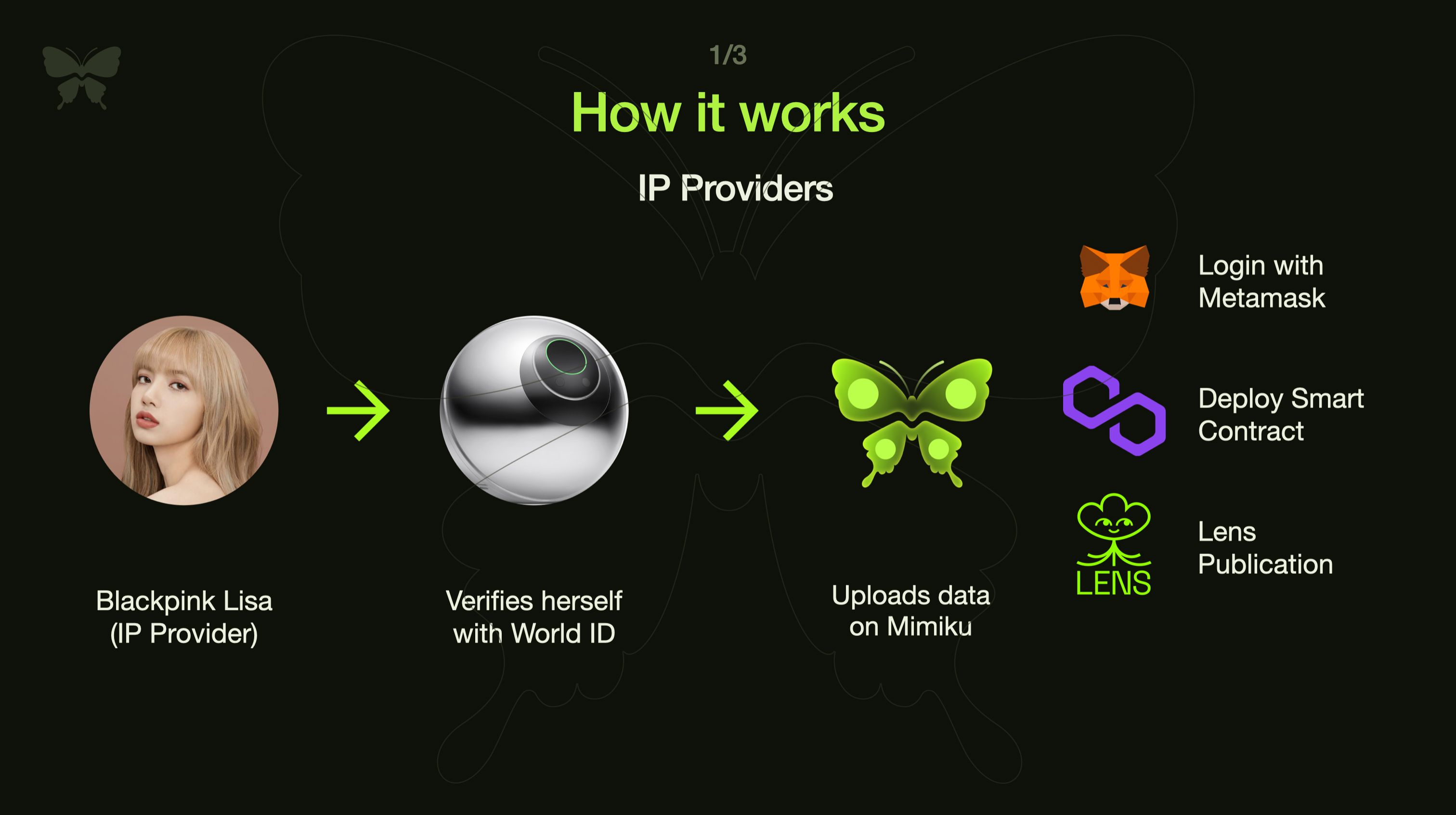

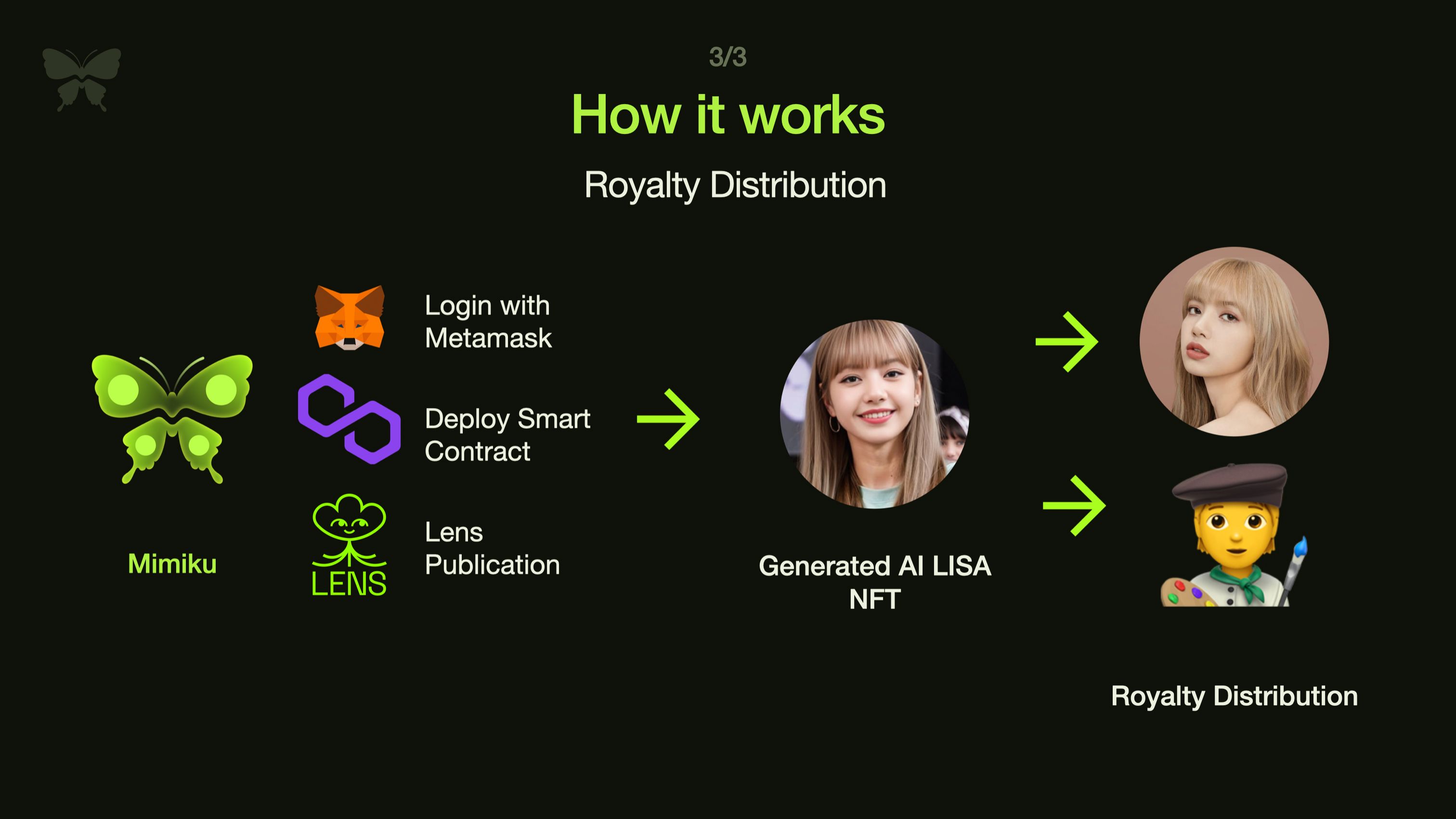

Mimiku seeks to address this problem by providing a royalty-based model hub for original and secondary creators. The platform allows IP providers to upload their data, which AI prompt engineers can then use to generate content. The royalty generated by the secondary content will be distributed to both the original and secondary creators in a fair and transparent manner.
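The source does not say how the royalty is divided. As a minimal sketch, assuming a hypothetical fixed share for the original IP provider expressed in basis points (`originalShareBps`, where 10000 bps = 100%), a payment for a piece of secondary content could be split as follows. The function name and the fixed-share rule are illustrative assumptions, not Mimiku's actual logic.

```typescript
// Hypothetical royalty split: the original IP provider receives a fixed share
// (in basis points) and the secondary creator (prompt engineer) gets the rest.
// Illustrative assumption only; not taken from the Mimiku contracts.

interface RoyaltySplit {
  original: number;  // amount paid to the original IP provider
  secondary: number; // amount paid to the secondary creator
}

const BPS_DENOMINATOR = 10000;

function splitRoyalty(payment: number, originalShareBps: number): RoyaltySplit {
  if (!Number.isSafeInteger(payment) || payment < 0) {
    throw new RangeError("payment must be a non-negative safe integer");
  }
  if (!Number.isInteger(originalShareBps) || originalShareBps < 0 || originalShareBps > BPS_DENOMINATOR) {
    throw new RangeError("originalShareBps must be an integer between 0 and 10000");
  }
  // Round the original share down; the remainder goes to the secondary creator,
  // so the two shares always add up to the full payment.
  const original = Math.floor((payment * originalShareBps) / BPS_DENOMINATOR);
  return { original, secondary: payment - original };
}

// Example: a 1000001-unit payment with a hypothetical 40% (4000 bps) original share.
const example = splitRoyalty(1000001, 4000);
console.log(example.original, example.secondary); // 400000 600001
```

Integer amounts mirror how token balances are handled on-chain; a production version would do this arithmetic in `uint256` inside the contract (or with `bigint` off-chain) rather than with JavaScript numbers.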

By leveraging World ID, Mimiku gives IP providers a verified digital identity while fully protecting their privacy. Users can log in easily with MetaMask through the MetaMask SDK. With Lens Protocol, all transactions and royalty payments are distributed and recorded immutably on Polygon.

This incentivizes both original and secondary creators to contribute to the platform, driving the growth of a more sustainable and ethical AI-generated content market.

How it's Made

There are four parts to our project: Lens Protocol, Polygon, Worldcoin, and MetaMask.

Lens Protocol

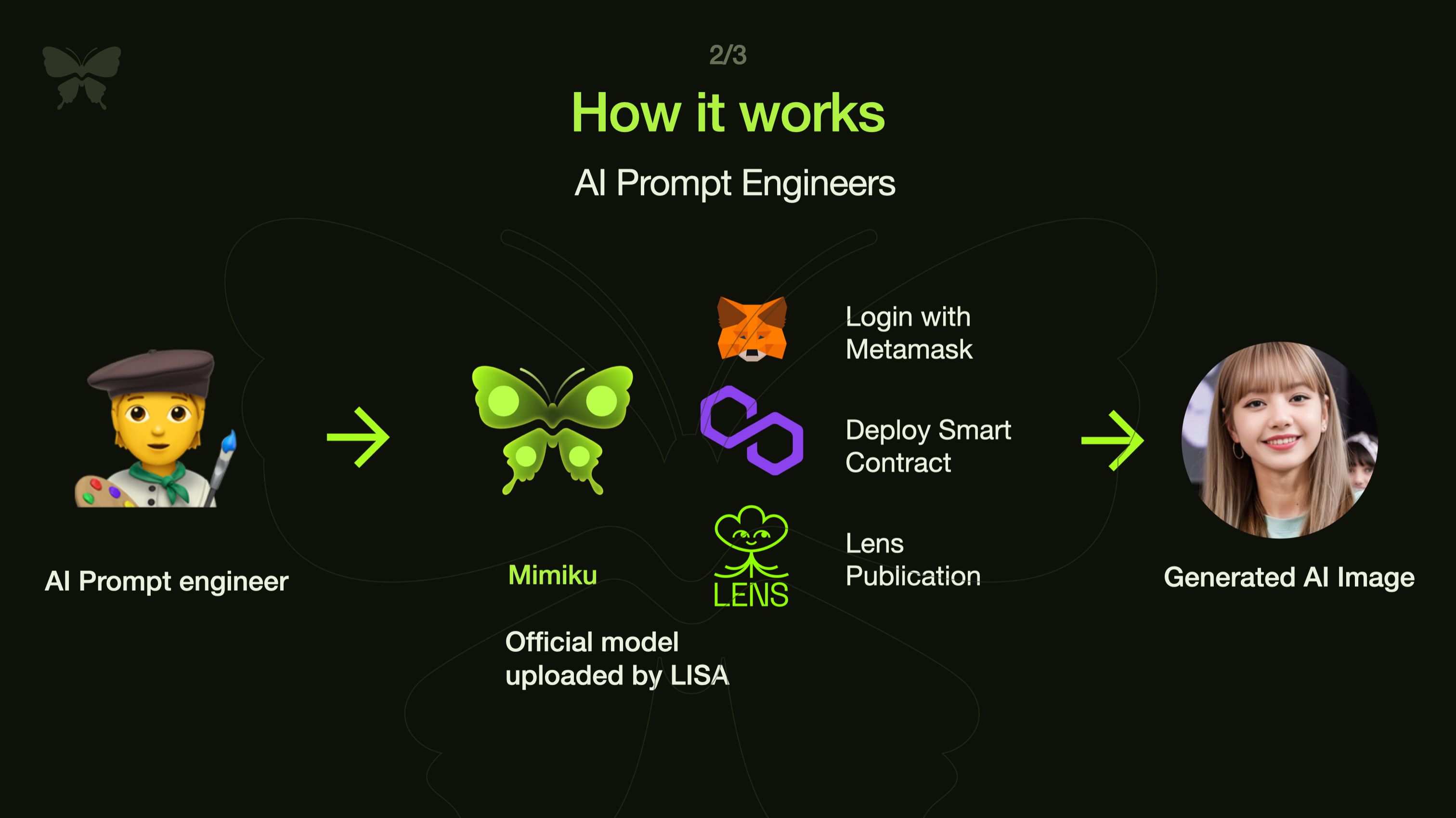

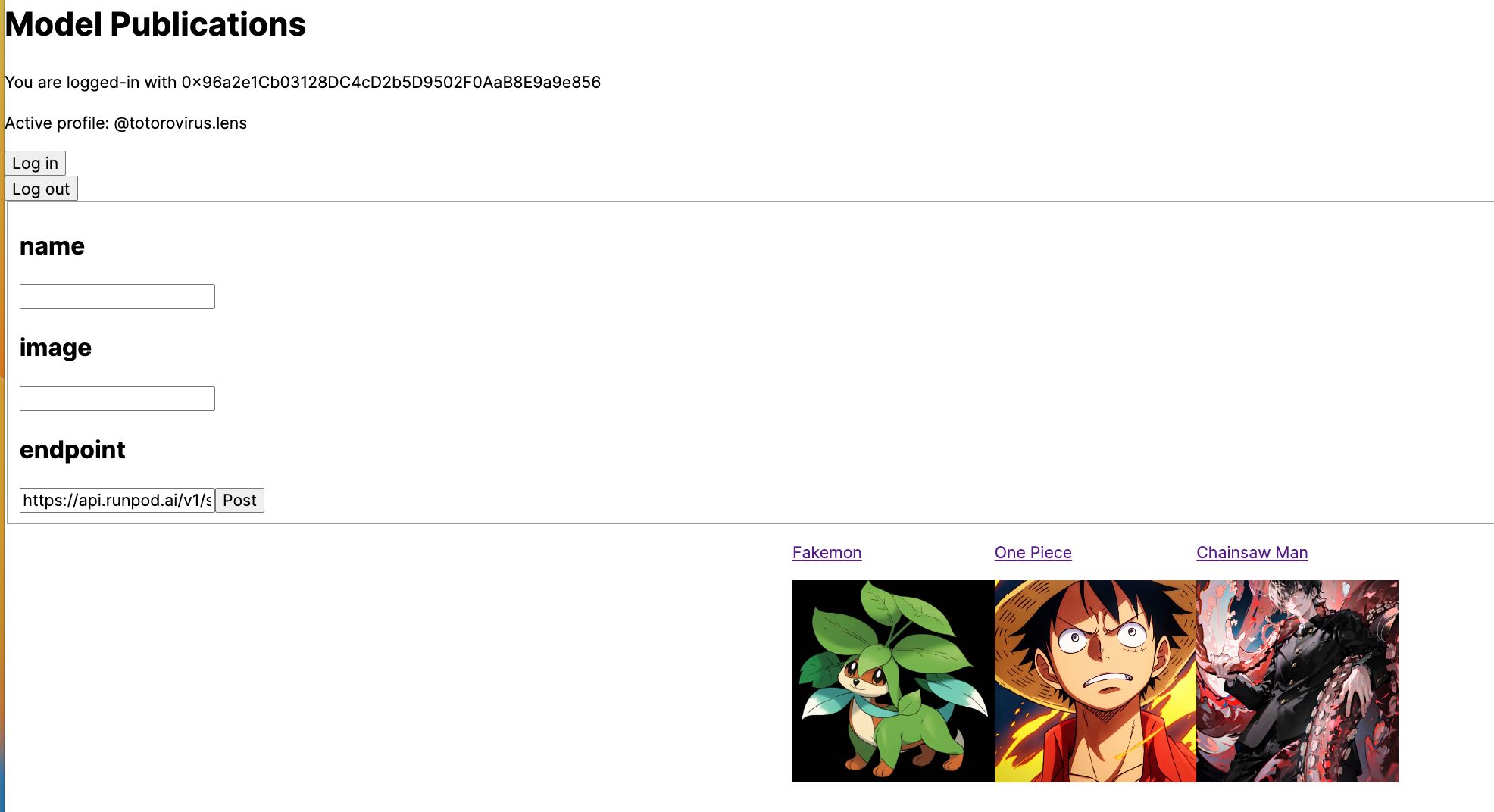

We have used Lens Protocol extensively to create a hierarchy of AI model trainers (creators) and prompt engineers. In Lens terms, an AI model holder creates a publication containing an endpoint URL that invokes the Stable Diffusion model to generate images. A prompt engineer can then generate an image by sending a prompt to the publication's endpoint URL, and create a comment that references the original model publication. In addition to the generated image, the endpoint also returns a zero-knowledge proof that the image was indeed generated by the published model.
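The publication hierarchy described above can be modeled as plain data. The sketch below is a hypothetical in-memory model, not the Lens API: a model holder's post carries the inference endpoint URL, and a prompt engineer's comment points back to that post by `(profileId, pubId)` and carries the generated image and the proof returned by the endpoint. All type and field names (`ModelPost`, `GenerationComment`, `endpointUrl`, `proof`, and so on) are illustrative assumptions.

```typescript
// Hypothetical in-memory model of the Mimiku publication hierarchy.
// Field names are illustrative; they are not taken from the Lens API.

interface ModelPost {
  kind: "post";
  profileId: number;   // Lens profile of the model holder (creator)
  pubId: number;       // publication id, counted per profile
  endpointUrl: string; // URL that runs the published Stable Diffusion model
}

interface GenerationComment {
  kind: "comment";
  profileId: number;        // Lens profile of the prompt engineer
  pubId: number;
  pointedProfileId: number; // profile that owns the referenced model post
  pointedPubId: number;     // pubId of the referenced model post
  prompt: string;
  imageUri: string;
  proof: string;            // proof returned by the endpoint (opaque here)
}

type Publication = ModelPost | GenerationComment;

// Returns the generation comments that reference a given model post.
function commentsOnModel(pubs: Publication[], model: ModelPost): GenerationComment[] {
  return pubs.filter(
    (p): p is GenerationComment =>
      p.kind === "comment" &&
      p.pointedProfileId === model.profileId &&
      p.pointedPubId === model.pubId,
  );
}

// Example data (hypothetical values).
const model: ModelPost = { kind: "post", profileId: 1, pubId: 1, endpointUrl: "https://example.com/generate" };
const pubs: Publication[] = [
  model,
  { kind: "comment", profileId: 2, pubId: 1, pointedProfileId: 1, pointedPubId: 1,
    prompt: "a watercolor fox", imageUri: "ipfs://example-image", proof: "0xproof" },
  // Points at a different publication, so it is not returned below.
  { kind: "comment", profileId: 3, pubId: 1, pointedProfileId: 1, pointedPubId: 2,
    prompt: "a city at night", imageUri: "ipfs://other-image", proof: "0xproof" },
];
console.log(commentsOnModel(pubs, model).map((c) => c.prompt)); // [ 'a watercolor fox' ]
```

Identifying a publication by the pair `(profileId, pubId)` reflects how Lens numbers publications per profile; the endpoint and proof fields stand in for whatever metadata the real publications carry.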

To verify that an image was actually generated by the published model, we have written a custom Lens reference module, ModelCheckpointReferenceModule, which checks the proof attached to each referencing comment or mirror.
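The check performed by `ModelCheckpointReferenceModule` is only described in prose here. The TypeScript sketch below simulates the idea off-chain and is loosely modeled on the initialize / process-comment / process-mirror hooks of a Lens reference module: when a model post is published, the module records a hypothetical checkpoint identifier, and every comment or mirror pointing at that post must carry a proof accepted by a verifier for that checkpoint. `ProofVerifier` is an injected stand-in (real zero-knowledge verification is out of scope), and all names are assumptions, not a transcription of the Solidity contract.

```typescript
// Off-chain simulation of a reference module that gates comments and mirrors
// on a proof tied to the referenced model's checkpoint. Hypothetical names throughout.

type ProofVerifier = (checkpointId: string, proof: string) => boolean;

class ModelCheckpointReferenceSim {
  // key "profileId:pubId" of the model post -> checkpoint identifier
  private readonly checkpoints = new Map<string, string>();
  private readonly verify: ProofVerifier;

  constructor(verify: ProofVerifier) {
    this.verify = verify;
  }

  private static key(profileId: number, pubId: number): string {
    return `${profileId}:${pubId}`;
  }

  // Called when the model post is created; stores the checkpoint it commits to.
  initializeReferenceModule(profileId: number, pubId: number, checkpointId: string): void {
    this.checkpoints.set(ModelCheckpointReferenceSim.key(profileId, pubId), checkpointId);
  }

  // Called for a comment on the model post; throws if the proof is rejected.
  processComment(pointedProfileId: number, pointedPubId: number, proof: string): void {
    this.check(pointedProfileId, pointedPubId, proof);
  }

  // Mirrors are checked exactly like comments.
  processMirror(pointedProfileId: number, pointedPubId: number, proof: string): void {
    this.check(pointedProfileId, pointedPubId, proof);
  }

  private check(profileId: number, pubId: number, proof: string): void {
    const checkpointId = this.checkpoints.get(ModelCheckpointReferenceSim.key(profileId, pubId));
    if (checkpointId === undefined) {
      throw new Error("referenced publication is not a registered model");
    }
    if (!this.verify(checkpointId, proof)) {
      throw new Error("proof does not match the model checkpoint");
    }
  }
}

// Toy verifier for illustration only: accepts "proof-for-<checkpointId>".
const toyVerifier: ProofVerifier = (checkpointId, proof) => proof === `proof-for-${checkpointId}`;
const refModule = new ModelCheckpointReferenceSim(toyVerifier);
refModule.initializeReferenceModule(1, 1, "sd-checkpoint-abc");
refModule.processComment(1, 1, "proof-for-sd-checkpoint-abc"); // accepted
try {
  refModule.processMirror(1, 1, "forged-proof");
} catch (err) {
  console.log((err as Error).message); // proof does not match the model checkpoint
}
```

Injecting the verifier keeps the gating logic separate from the proof system, so a real verifier (on-chain, a verifier contract call) could replace the toy one without changing the reference checks.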

Our custom module https://github.com/jinmel/ethtokyo2023/blob/main/contracts/core/contracts/core/modules/reference/ModelCheckpointReferenceModule.sol

Usage of Lens-react to build UI components:

Login and model publication: https://github.com/jinmel/ethtokyo2023/blob/main/my-app/src/app/components/directory/directory.component.jsx

Generating images and creating comments: https://github.com/jinmel/ethtokyo2023/blob/main/my-app/src/app/components/PostForm/index.tsx

Polygon

We deployed our contract, which builds on Lens Protocol and Worldcoin, to the Polygon Mumbai testnet.

https://github.com/jinmel/ethtokyo2023/blob/main/contracts/core/contracts/core/modules/reference/ModelCheckpointReferenceModule.sol

Worldcoin

Our Lens module uses the Worldcoin SDK to verify that the model publisher is a real human, preventing non-human accounts from publishing Stable Diffusion models.
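World ID proofs include a nullifier hash that is the same every time a given person verifies for a given app and action, which is what lets an application reject the same human verifying twice. The sketch below shows that gating pattern off-chain. `WorldIdProof` and the injected `WorldIdVerifier` are hypothetical stand-ins (the real proof check is done by the World ID SDK or contract and is not reproduced here), and limiting each human to one registered publisher is an assumption about how Mimiku might use the nullifier.

```typescript
// Hypothetical publisher registry gated on World ID verification.
// `WorldIdProof` and the verifier function are illustrative, not SDK APIs.

interface WorldIdProof {
  merkleRoot: string;
  nullifierHash: string; // stable per human for a given app/action
  proof: string;
}

type WorldIdVerifier = (signal: string, proof: WorldIdProof) => boolean;

class HumanPublisherRegistry {
  private readonly usedNullifiers = new Set<string>();
  private readonly publishers = new Set<string>();
  private readonly verifyWorldIdProof: WorldIdVerifier;

  constructor(verifier: WorldIdVerifier) {
    this.verifyWorldIdProof = verifier;
  }

  // Registers `publisher` (e.g. a wallet address used as the proof signal) if the
  // proof is valid and its nullifier has not been used. A failed attempt does not
  // consume the nullifier.
  register(publisher: string, proof: WorldIdProof): void {
    if (this.usedNullifiers.has(proof.nullifierHash)) {
      throw new Error("this human has already registered a publisher");
    }
    if (!this.verifyWorldIdProof(publisher, proof)) {
      throw new Error("invalid World ID proof");
    }
    this.usedNullifiers.add(proof.nullifierHash);
    this.publishers.add(publisher);
  }

  // Publishing a model is allowed only for verified publishers.
  canPublishModel(publisher: string): boolean {
    return this.publishers.has(publisher);
  }
}

// Toy verifier for illustration only.
const acceptsValidProof: WorldIdVerifier = (_signal, p) => p.proof === "valid-proof";
const registry = new HumanPublisherRegistry(acceptsValidProof);
registry.register("0xPublisherA", { merkleRoot: "0xroot", nullifierHash: "0xnullifier1", proof: "valid-proof" });
console.log(registry.canPublishModel("0xPublisherA")); // true
console.log(registry.canPublishModel("0xPublisherB")); // false
```

On-chain, the same idea is usually expressed as a mapping of used nullifier hashes that is checked before the proof is accepted.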

https://github.com/jinmel/ethtokyo2023/blob/main/contracts/core/contracts/core/modules/reference/ModelCheckpointReferenceModule.sol

MetaMask

Our dApp uses the MetaMask SDK to log in to the Polygon Mumbai testnet.
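The MetaMask SDK exposes a standard EIP-1193 provider (the `request({ method, params })` interface). The sketch below shows a common login flow against any such provider: request accounts with `eth_requestAccounts`, switch to Polygon Mumbai (chain ID 80001, `0x13881`) with `wallet_switchEthereumChain`, and fall back to `wallet_addEthereumChain` when the wallet reports error code 4902 (chain not yet added). The `Eip1193Provider` interface and `connectToMumbai` helper are written here for illustration, obtaining the provider from the SDK is not shown, and the RPC URL is a placeholder assumption.

```typescript
// Minimal EIP-1193 shape; MetaMask's provider implements `request`.
interface Eip1193Provider {
  request(args: { method: string; params?: unknown[] }): Promise<unknown>;
}

const MUMBAI_CHAIN_ID = "0x13881"; // 80001 in hex

// Placeholder RPC endpoint (hypothetical): replace with a real Mumbai RPC URL.
const MUMBAI_RPC_URL = "https://example.invalid/mumbai-rpc";

// Connects the wallet, makes sure it is on Mumbai, and returns the first account.
async function connectToMumbai(provider: Eip1193Provider): Promise<string> {
  // Prompts the user to connect and returns the authorized accounts.
  const accounts = (await provider.request({ method: "eth_requestAccounts" })) as string[];
  if (accounts.length === 0) {
    throw new Error("no account authorized");
  }
  try {
    await provider.request({
      method: "wallet_switchEthereumChain",
      params: [{ chainId: MUMBAI_CHAIN_ID }],
    });
  } catch (err) {
    // 4902 means the wallet does not know this chain yet; any other error is rethrown.
    if ((err as { code?: number } | null)?.code !== 4902) throw err;
    await provider.request({
      method: "wallet_addEthereumChain",
      params: [{
        chainId: MUMBAI_CHAIN_ID,
        chainName: "Polygon Mumbai",
        nativeCurrency: { name: "MATIC", symbol: "MATIC", decimals: 18 },
        rpcUrls: [MUMBAI_RPC_URL],
      }],
    });
  }
  return accounts[0];
}
```

Taking the provider as a parameter keeps the flow testable with a mock and independent of how the SDK instance is created.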

https://github.com/jinmel/ethtokyo2023/blob/main/my-app/src/app/components/directory/directory.component.jsx